Prioritizes high-performance, low-latency data transmission without compromising security, integrating robust safeguards throughout the AI development lifecycle.

Provides real-time web content extraction and summarized AI Hybrid Search results with minimal latency.

Provides model hosting and end-to-end optimization, with distributed inference services available in 80+ countries.

Ensures data privacy with endpoint encryption and provides localized storage across major global regions for compliance purposes.

Provides object storage for AI training, reducing data transfer costs in multi-cloud environments.

Provides a layer of control and protection for LLM applications.

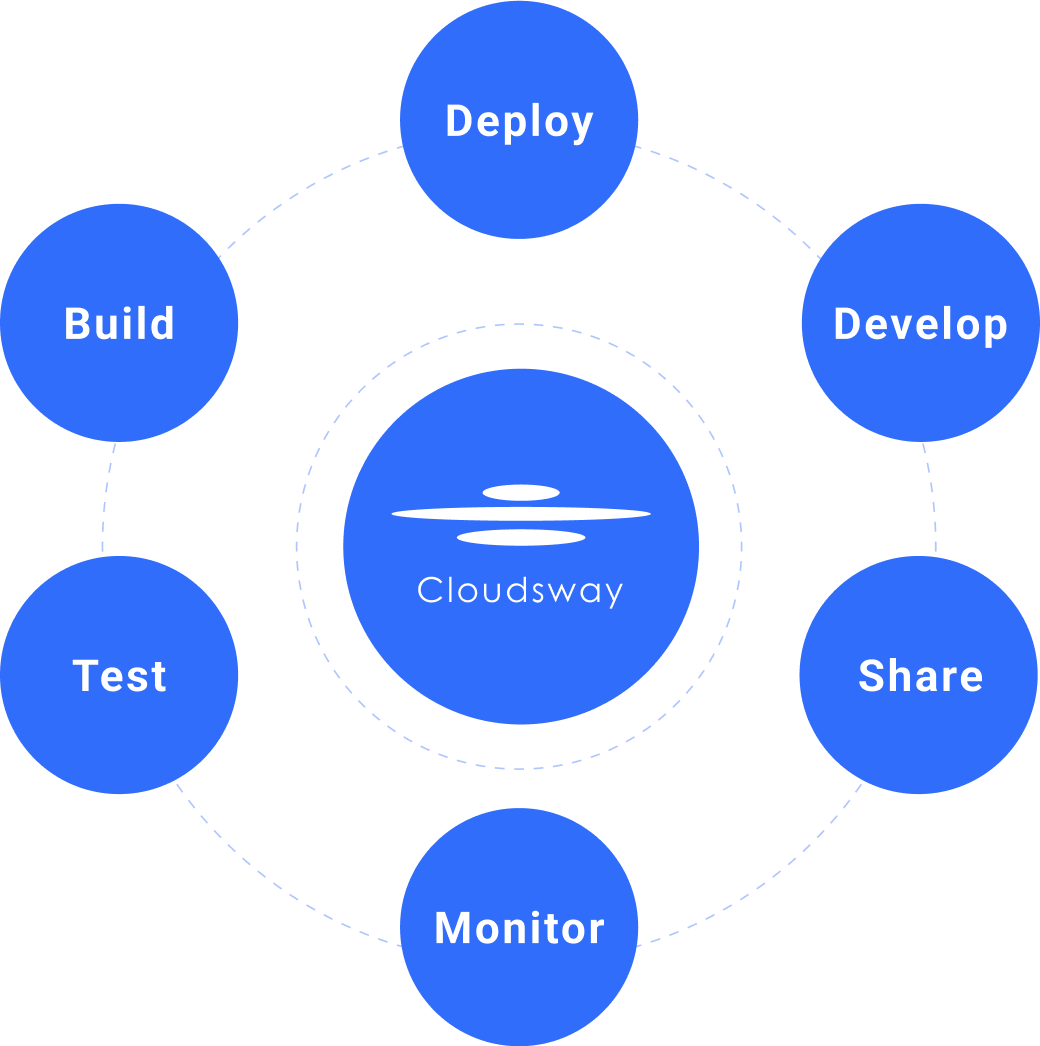

Manage and scale diverse generative AI workloads with the Cloudsway AI platform.

Deliver rapid results with minimal latency, keeping models efficient in real-world scenarios.

- **6X faster**: via the Cloudsway model engine
- **600 tokens/s**: fast LLM engine
- **30 seconds**: fast deployment
- **99.9% availability**: service uptime

The use of Cloudsway LLM Playground is subject to Cloudsway Terms of Use and Privacy Policy. The models featured in the LLM Playground may be subject to additional third-party license terms and restrictions, as further detailed in the developer documentation. The output generated by the LLM Playground has not been verified by Cloudsway for accuracy and does not represent Cloudsway’s views.

Plan smarter, scale confidently, and predict the costs associated with your AI workloads

Choose your models and enter an estimated size and daily traffic


Scale your AI deployments globally with our cost-effective GPU infrastructure, designed for distributed computing and powered by the latest hardware

- **GB200**: for massive-scale training at optimal performance
- **H100**: for every stage of the model production and operation lifecycle
- **A100**: for inference and fine-tuning models with moderate loads
- **L40S**: for fine-tuning in hours or training small LLMs in days

Core capabilities for innovating in the cloud with simplified operations and a comprehensive experience

Integrated smart operations handle complexity with ease.

High-performance computation and high-throughput storage meet cloud-native managed services for maximum efficiency.

An all-in-one platform integrated with open-source frameworks, eliminating operational hassles.

Build, deploy, scale, and co-sell your applications: we've got you covered.

Teamed up with leading minds, Cloudsway is the partner of choice for building and deploying cutting-edge solutions.


© 2025 All rights reserved.


Your AI journey starts here.

Fill out the form and we’ll get back to you with answers.